Radar Technology for Smart Surveillance and Environmental Sensing

Expertise

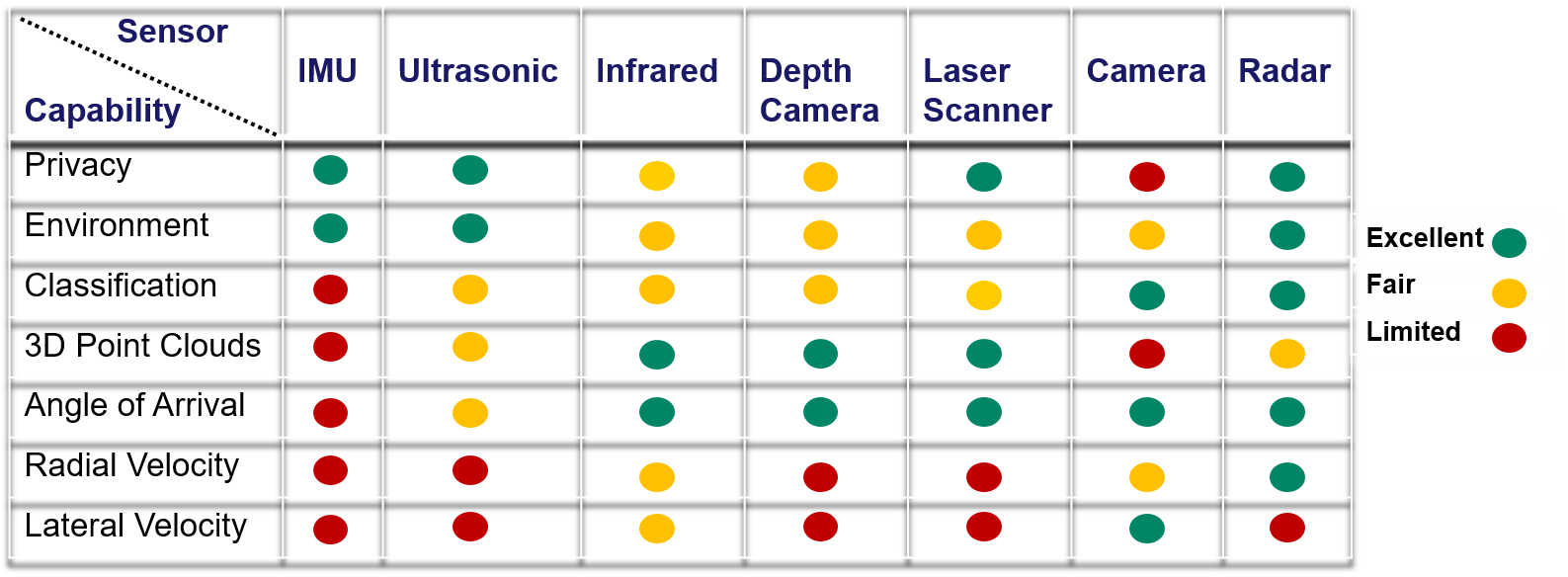

Radar is an attractive sensing technology for human-related applications: it captures human motion in real time while preserving privacy, and it keeps working in conditions that defeat camera-based sensors, such as smoke, dust, darkness, or direct sunlight. It is particularly well suited to capturing the so-called micro-motions associated with human locomotion or vital functions, such as limb motion while walking and chest activity while breathing, which makes it the sensor of choice in many smart surveillance and healthcare applications.

Taking advantage of recent advances in radar sensor manufacturing (processing power, real-time performance, and compact size), our team has investigated the integration of radar sensors and their use for smart surveillance and/or environmental sensing in different real-life scenarios: in public places, in industrial contexts, and in healthcare applications. In many cases, classical radar processing approaches are supplemented with machine learning techniques that leverage the highly descriptive content of the sensor signal. Where required, the radar sensor was integrated with other sensing technologies into a multi-modal perception system.

What we offer:

- Feasibility studies in use-case scenarios that require human interaction.

- Consultancy on radar hardware selection and customization based on the application constraints.

- Professional lab tests for problem evaluation, together with our experts in human locomotion.

Related projects

Covid-19 Access Checker

Figure 2 Radar-based solution to capture chest micro-motions and monitor the breathing rate without contact in a standing position

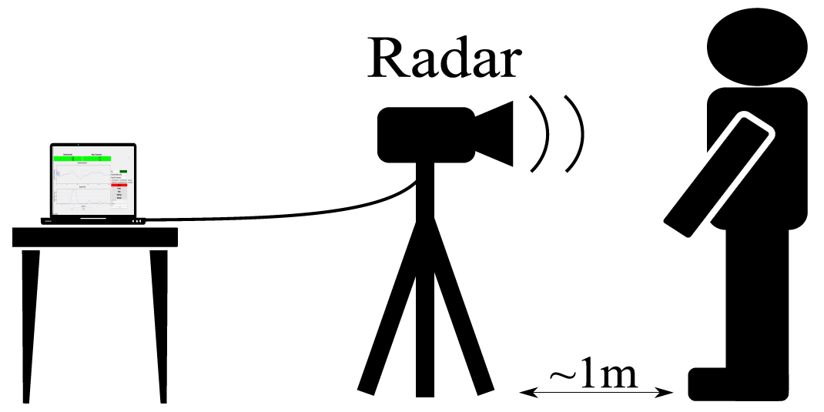

Radar's ability to capture body micro-motions can be used to measure chest movement remotely. This makes it possible to estimate vital signs (such as breathing and heart rates) in real time and without contact with the human subject. In this context, we developed for the Spanish company ITURRI an algorithm that extracts the chest micro-motions from the radar signal and estimates the breathing rate while the subject is standing at a distance of 1 to 2 m from the radar. The radar sensor was combined with a thermal camera to additionally measure body temperature remotely. With this multi-modal sensor system, named Access Checker, critical vital parameters of visitors can be measured without contact before granting them access to public places such as airports or hospitals.
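As a rough illustration of the last processing step, the sketch below estimates a breathing rate from a chest displacement signal by locating the strongest spectral peak in a plausible breathing band. It is not the algorithm developed for the Access Checker: the function name `estimate_breathing_rate`, the 0.1 to 0.6 Hz band, the 20 Hz frame rate, and the synthetic test signal are all assumptions made for this example, and the displacement is assumed to have already been extracted from the radar phase at the chest range bin.

```python
import numpy as np

def estimate_breathing_rate(displacement, fs, band=(0.1, 0.6)):
    """Return the dominant breathing rate in breaths per minute.

    displacement: 1-D chest displacement samples (hypothetical input,
                  assumed to come from the radar phase at the chest range bin)
    fs:           sampling rate of the displacement signal in Hz
    band:         assumed breathing band in Hz (6 to 36 breaths per minute)
    """
    x = np.asarray(displacement, dtype=float)
    x = x - x.mean()                                   # remove the static offset
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x))))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    mask = (freqs >= band[0]) & (freqs <= band[1])     # keep the breathing band only
    peak_freq = freqs[mask][np.argmax(spectrum[mask])]
    return 60.0 * peak_freq

# Synthetic 60 s recording: 0.25 Hz breathing (4 mm), 1.2 Hz heartbeat (0.3 mm), noise.
fs = 20.0
t = np.arange(1200) / fs
rng = np.random.default_rng(0)
chest = (4e-3 * np.sin(2 * np.pi * 0.25 * t)
         + 0.3e-3 * np.sin(2 * np.pi * 1.2 * t)
         + 0.5e-3 * rng.standard_normal(t.size))

rate = estimate_breathing_rate(chest, fs)
print(f"Estimated breathing rate: {rate:.1f} breaths/min")
```

A spectral peak search like this needs an observation window of several breathing cycles, which is one reason a practical system would typically add motion rejection and tracking on top of it.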

Smart Environmental Sensing for Adaptive Prosthetic Limbs

The ability of radar sensors to measure the distance to targets in their field of view has been used in numerous applications (e.g. autonomous driving) that require dimensioning the targets of interest. Using the scanning capability of multiple-input multiple-output (MIMO) radar sensors, the positions of multiple targets in a plane can be tracked in real time. When the radar sensor is mounted on a mobile platform, this ability can be used, for instance, to generate a two-dimensional map of the targets in the sagittal plane, from which the dimensions of static objects such as stairs can be extracted. Using this approach, we developed a perception system comprising a MIMO radar and an inertial measurement unit (IMU) that can potentially be integrated into any mobile robotic system to scan and map objects in the sagittal plane. One possible application lies in smart environmental sensing for adaptive prosthetic legs, to support automated obstacle traversal. In this context, we developed and validated a real-time, computationally efficient approach for stair dimensioning. Our results were presented at EUSIPCO 2021.

Towards Safe Human-Robot Collaboration

Different moving targets are captured by the radar with unique signatures in both the velocity and range dimensions. These signatures can be used in smart surveillance applications that need to distinguish human targets from other moving targets, such as safe human-robot collaboration. Building on this idea, we developed a technique based on a shallow neural network that differentiates humans from moving robots in order to monitor the presence of humans in a given area. This approach can be used, for instance, to monitor safety areas around a stationary robot, which must adapt its behavior in the presence of a human operator but not when an automated guided vehicle (AGV) approaches. Our results were published in 2018 at the IEEE Radar Conference (RadarConf’18).
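To make the range and velocity signature concrete, the sketch below computes a range-Doppler map from one frame of FMCW radar data with a windowed two-dimensional FFT, which is the standard way to obtain such a map. It only illustrates the kind of input a classifier could work on and does not reproduce the published method; the function name `range_doppler_map`, the frame size, and the single synthetic point target are assumptions made for this example.

```python
import numpy as np

def range_doppler_map(frame):
    """Magnitude range-Doppler map of one frame.

    frame: complex array of shape (n_chirps, n_samples), i.e. slow time
           by fast time (hypothetical layout chosen for this sketch).
    Returns an array of the same shape with zero Doppler in the middle row.
    """
    n_chirps, n_samples = frame.shape
    range_win = np.hanning(n_samples)                 # window along fast time
    doppler_win = np.hanning(n_chirps)[:, None]       # window along slow time
    range_fft = np.fft.fft(frame * range_win, axis=1)
    doppler_fft = np.fft.fft(range_fft * doppler_win, axis=0)
    return np.abs(np.fft.fftshift(doppler_fft, axes=0))

# Synthetic point target placed at range bin 20 and Doppler bin +5.
n_chirps, n_samples = 64, 128
range_bin, doppler_bin = 20, 5
n = np.arange(n_samples)
m = np.arange(n_chirps)[:, None]
frame = np.exp(2j * np.pi * (range_bin * n / n_samples + doppler_bin * m / n_chirps))

rd = range_doppler_map(frame)
peak_d, peak_r = np.unravel_index(np.argmax(rd), rd.shape)
print("range bin:", int(peak_r), "Doppler bin:", int(peak_d) - n_chirps // 2)
```

A walking human spreads energy over many Doppler bins around the torso velocity, whereas a rigid vehicle tends to produce a compact response, which is the kind of difference a small network can learn from such maps.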

Human Identification

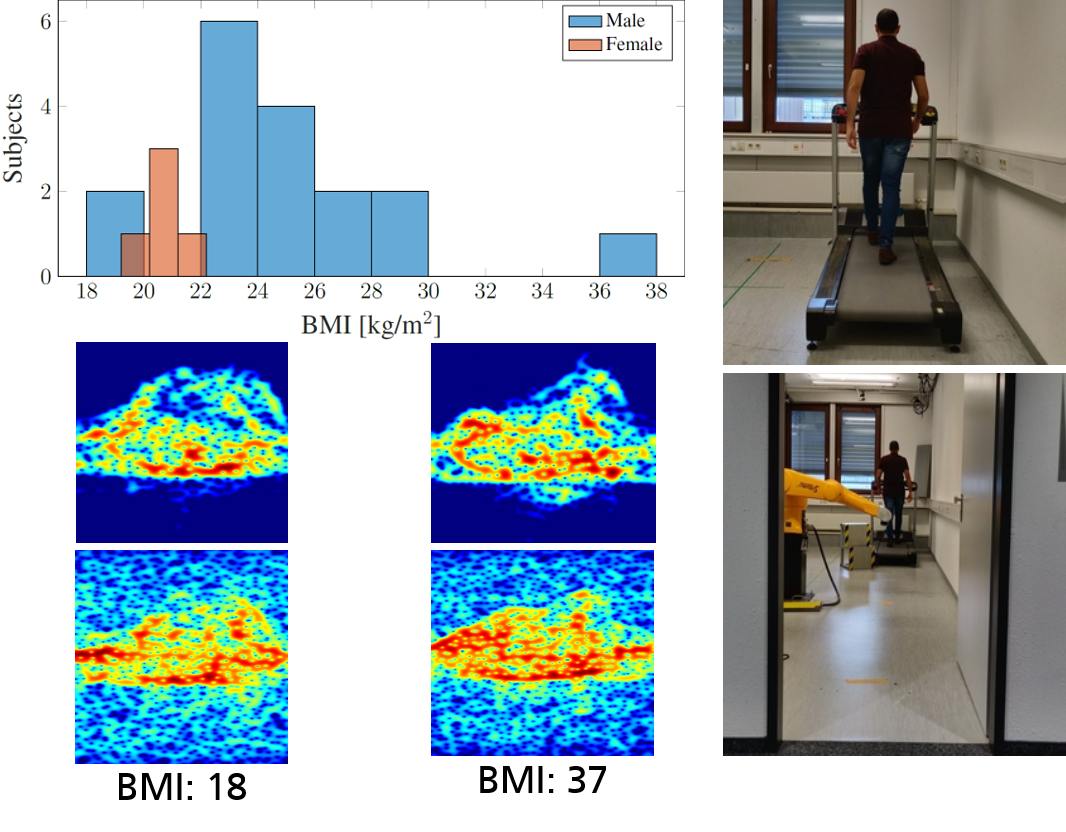

Figure 3 A high identification accuracy for 22 human subjects was achieved based on half a gait cycle, using signals captured while the subjects walked on a treadmill (the signals are shown in both optimal (top) and noisy (bottom) cases for two different BMI values)

Human identification is a required function in many security applications, e.g. monitoring a home entrance. Relying only on camera systems has serious shortcomings in very dark or very bright lighting conditions, as well as when smoke and/or dust hamper visibility. Cameras are also problematic with respect to the privacy of passers-by in the street and of neighbors. Radar sensors, by contrast, do not suffer from these limitations and can be used in such applications to capture the micro-motion signatures specific to each person in real time. In this context, we conducted a study using a deep neural network to differentiate between 22 subjects based on their walking signatures. The study also showed a direct correlation between body mass index (BMI) and walking style. Our results were published in 2019 at the IEEE Radar Conference (RadarConf’19).

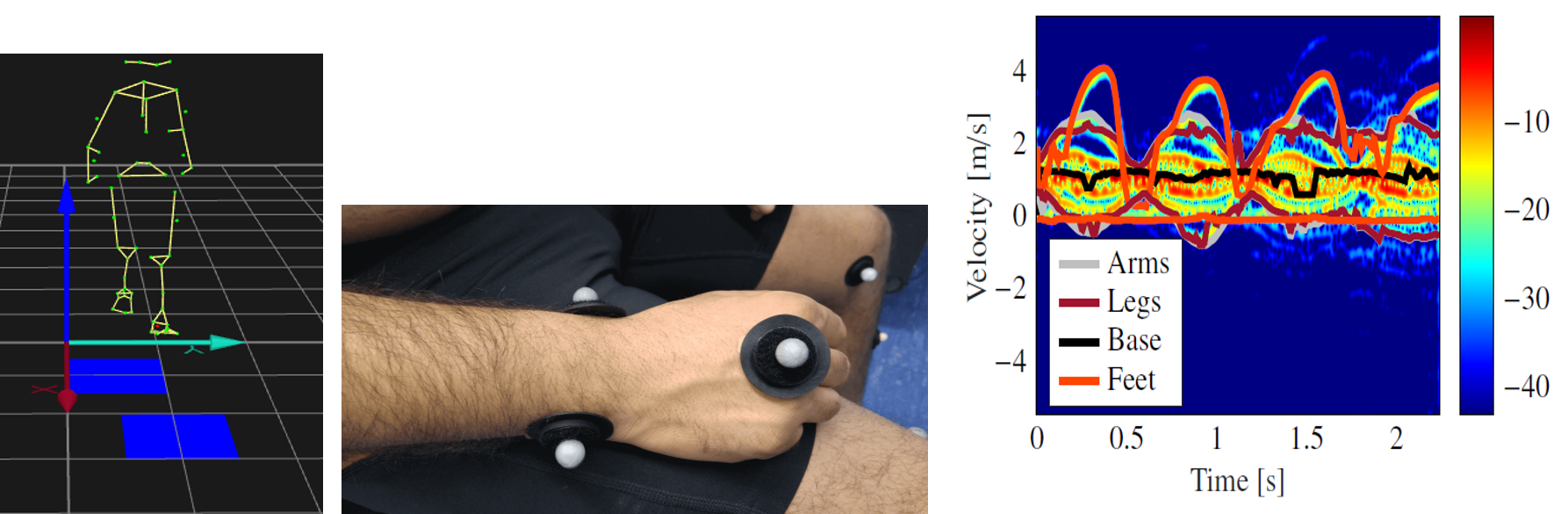

Human Micro-Motion Analysis

The unique micro-Doppler (μ-D) signature of human body motion can be analyzed as the superposition of the μ-D signatures of the individual body parts. Extracting the μ-D signatures of human limbs in real time can be used to detect, classify, and track human motion, especially for safety applications. We used our motion capture system to develop a realistic simulation model of the expected radar signals. Leveraging this model, we achieved real-time decomposition of limb trajectories, using machine learning to differentiate four main classes (trunk, arms, legs, feet). Our results were published in 2017 at the IEEE Radar Conference (RadarConf’17).
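The superposition idea can be illustrated with a short-time Fourier transform, the usual tool for visualizing μ-D signatures. The sketch below is a toy example and not the simulation model or decomposition method described above: the function name `micro_doppler_spectrogram`, the window settings, and the two-component signal (a constant "trunk" Doppler plus a weaker, sinusoidally swinging "limb" Doppler) are assumptions chosen only to show how body parts overlap in one spectrogram.

```python
import numpy as np

def micro_doppler_spectrogram(signal, fs, win_len=128, hop=32):
    """Power spectrogram of a complex radar signal via a windowed STFT.

    Returns (freqs, times, spec) where spec has shape (win_len, n_frames)
    and freqs is ordered from negative to positive Doppler.
    """
    window = np.hanning(win_len)
    starts = np.arange(0, len(signal) - win_len + 1, hop)
    frames = np.stack([signal[s:s + win_len] * window for s in starts])
    spec = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    freqs = np.fft.fftshift(np.fft.fftfreq(win_len, d=1.0 / fs))
    times = (starts + win_len / 2) / fs               # frame centers in seconds
    return freqs, times, np.abs(spec).T ** 2

# Toy signal: trunk at a constant 100 Hz Doppler, limb swinging +/-150 Hz around it at 1 Hz.
fs = 1000.0
t = np.arange(2000) / fs
trunk = np.exp(2j * np.pi * 100.0 * t)
limb_phase = 2 * np.pi * 100.0 * t - 150.0 * np.cos(2 * np.pi * 1.0 * t)
limb = 0.4 * np.exp(1j * limb_phase)

freqs, times, spec = micro_doppler_spectrogram(trunk + limb, fs)
dominant = freqs[np.argmax(spec.mean(axis=1))]
print(f"Dominant Doppler: {dominant:.1f} Hz over {len(times)} frames")
```

In the resulting spectrogram the trunk appears as a steady line and the limb as an oscillating trace around it; separating such traces per body part is the step that the machine learning approach addresses.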