Center for Cognitive Robotics

The Center for Cognitive Robotics extends the research and innovation expertise of Fraunhofer IPA by implementing Artificial Intelligence (AI) in the research fields of robotics and human-machine interaction.

In combination with low-data AI methods, robotics as a physical form of AI in real applications is used to enhance the performance and autonomy of robot systems in the sense of “automating automation”. It is also employed to master complex tasks. To achieve successful human-machine interaction, the Center develops solutions that enable the technology to be universally applied. Industrial robots and service robots gain the cognitive ability to perceive their environments and to derive appropriate actions as a result. They “understand” how to solve a task, or learn by imitating the human model.

The Center helps companies fully exploit the potential of service and industrial robots. It also contributes to opening up new fields of application beyond the realms of manufacturing, as well as to finding answers to megatrends such as demographic change, personalization, sustainability and digitization. Technological developments are emerging that facilitate entry into robotics, support the further development and improvement of existing applications and allow them to be organized more efficiently. In a nutshell, the Center offers technologies which:

- reduce the effort involved in programming robots,

- increase the availability of robot systems,

- automate the set-up for gripping and machining processes,

- make service robots easier to use,

- and improve the autonomy of robots.

Through the joint project with the AI Innovation Center entitled “Learning Systems”, the IPA scientists also draw on the expertise of Cyber Valley in fundamental AI research.

Our expertise

Self-learning CPR

Application & Use

In order to compete on a global scale, companies need to manufacture their products efficiently despite ever-smaller lot sizes. Robots could play a key role in this regard. Until now, programming robots has been a time-consuming job that can only be done by experts. As a result, robots are only cost-effective if they are used for large lot sizes. The goal of “self-learning cyber-physical robot systems” is to considerably simplify robot programming via machine learning and to automate it to a large extent. The robot explores its environment autonomously and is able to deduce and optimize its future behavior. This learning process takes place in a physical simulation environment where the real production environment is modeled as a digital twin. Consequently, the real production process can continue uninterrupted and the robot can be put into operation faster.

Technology

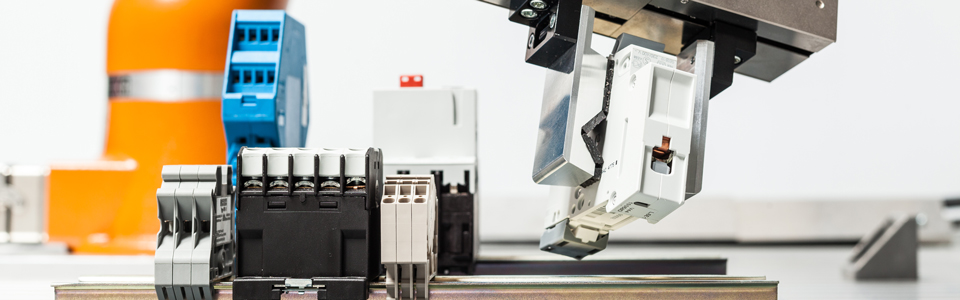

The project implements an integrated physical simulation that allows assembly processes involving snap fits to be simulated realistically. A machine-learning framework serves as the software framework for the robot simulation. Expert knowledge about robot programming and about the assembly task to be carried out is provided via predefined robot skills and via an extended simulation environment in the form of a detailed joining model. The technology developed is then transferred from simulation to reality and evaluated in two hands-on steps – joining objects to shafts, i.e. the peg-in-hole process, and joining control cabinet terminals – to ultimately implement initial applications for control cabinet installations.

Self-optimizing CPR

Application & Use

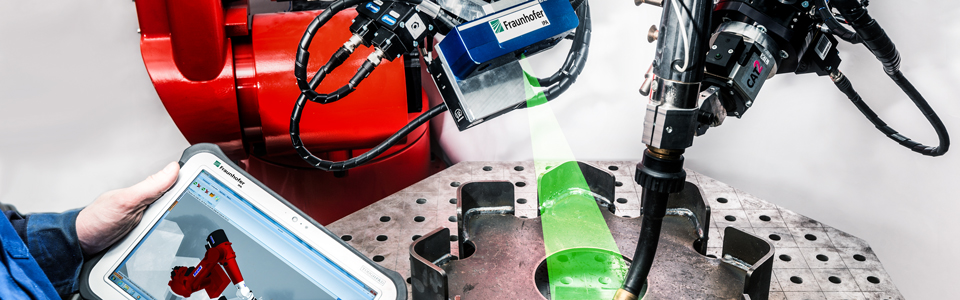

The need for automation in welding is high, and the shortage of skilled personnel makes it even more acute. However, robots are rarely cost-effective when it comes to small lot sizes. Therefore, Fraunhofer IPA is developing a range of components to simplify the use of welding robots and to make them more cost-effective. This also includes improving and extending sensor concepts and algorithms for collision-free welding trajectory planning.

Technology

Apart from using 3D-sensor technology to localize components and detect discrepancies in the assembly process, this project is developing a 2D-line scanner add-on for collaborative welding robots (“cobots”). The add-on makes it much easier to program the welding seam with affordable cobots such as the UR10e. To do so, the welding robot first needs to be brought to the start position of the seam. Thanks to the upstream line scanner, the robot is then able to follow the welding seam autonomously. In contrast to 2D-sensor solutions, this technology is not limited to online adjustments of the programmed welding seam or a subsequent quality check. It automates the programming of the welding seam itself. This makes the time-consuming, manual teach-in a thing of the past, and discrepancies in the seam are automatically taken into account.
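As a rough illustration of how a line scanner can locate a seam, the following sketch finds the sharpest kink in a single height profile. It is not the method used in the project: the function name `seam_index`, the profile format (a list of height samples across the joint) and the second-difference criterion are all assumptions chosen for simplicity.

```python
from typing import List


def seam_index(heights: List[float]) -> int:
    """Return the index of the sharpest kink in a height profile.

    Hypothetical helper: the kink is taken to be the sample with the
    largest absolute second difference, which is a crude stand-in for
    a real seam detector.
    """
    if len(heights) < 3:
        raise ValueError("need at least three samples")
    best_i, best_k = 1, -1.0
    for i in range(1, len(heights) - 1):
        # Discrete curvature estimate at sample i.
        k = abs(heights[i - 1] - 2.0 * heights[i] + heights[i + 1])
        if k > best_k:
            best_i, best_k = i, k
    return best_i


# Assumed example: an idealized V-groove scanned across the joint.
profile = [3.0, 2.0, 1.0, 0.0, 1.0, 2.0, 3.0]
groove = seam_index(profile)
```

Repeating this for each new scan line while the robot moves would yield a sequence of seam points ahead of the torch, which is the general idea behind following a seam with an upstream sensor.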

Self-healing CPR

Application & Use

With the increasing use of robots in industry, there is a steady need for robots that are able to complete their tasks successfully even in challenging situations. However, robots are susceptible to different kinds of error. Currently, to write a reliable program, programmers have to consider all possible errors when programming an application, which makes the job both more time-consuming and more complex. Even so, errors which have not been dealt with or which crop up unexpectedly cannot be remedied automatically. In such cases, the best available response is to shut down the control system safely, although this may increase downtime.

Technology

To combat this problem, the scientists at Fraunhofer IPA are developing a new concept to provide effective assistance with automatic error detection and correction. In the Center for Cognitive Robotics, two main functionalities will be available. One is an error-tolerant program sequence for simple robot applications, which is used to remedy some errors known from experience in production. The other is a pipeline for dealing with errors (error monitoring, diagnosis, and correction), which is used to remedy unknown errors. In the pipeline, AI-based algorithms will automatically diagnose errors and help robots to recover from an error state.
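The three pipeline stages named above (monitoring, diagnosis, correction) can be sketched as follows. This is a simplified illustration, not the Fraunhofer IPA implementation: the `Observation` fields, the error labels and the recovery actions are hypothetical, and a hand-written rule set stands in for the AI-based diagnosis.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Observation:
    """Hypothetical sensor snapshot of a simple gripping task."""
    gripper_closed: bool
    part_detected: bool


# Assumed lookup table: error label -> recovery action.
RECOVERIES: Dict[str, str] = {
    "part_dropped": "re-grasp part",
    "part_missing": "request new part",
}


def monitor(obs: Observation) -> bool:
    """Monitoring: True if the observation deviates from the expected state."""
    return not (obs.gripper_closed and obs.part_detected)


def diagnose(obs: Observation) -> str:
    """Diagnosis: map symptoms to an error label (rules replace a learned model)."""
    if obs.gripper_closed and not obs.part_detected:
        return "part_dropped"
    if not obs.gripper_closed and not obs.part_detected:
        return "part_missing"
    return "unknown"


def correct(error: str) -> str:
    """Correction: pick a recovery action, falling back to a safe stop."""
    return RECOVERIES.get(error, "safe stop")


def handle(obs: Observation) -> Optional[str]:
    """Run the pipeline; return None when no error is detected."""
    if not monitor(obs):
        return None
    return correct(diagnose(obs))
```

In this sketch, the safe stop remains the fallback for anything the diagnosis cannot classify, so adding a new recovery only requires a new label and table entry.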

Object Perception

Application & Use

The use of mobile service robots in dynamic and unstructured everyday environments calls for appropriately flexible perception abilities. However, current perception solutions for robotics are highly optimized special systems that are designed for a specific range of tasks. Consequently, these systems are not universally applicable and can only be used for other purposes with great difficulty. Another challenge is the need for highly efficient algorithms that can run on mobile systems with limited computing resources. The testbed “Object Perception” demonstrates how these hurdles concerning perception solutions can be overcome.

Technology

Within the scope of the “Object Perception” testbed, a generic and universal object detection system is being developed which supports in particular the use of mobile service robots in unstructured everyday environments. In doing so, the scientists draw on results of extensive groundwork carried out by Fraunhofer IPA in the fields of 2D- and 3D-image processing and object detection for robotics. Important aspects that will increase the flexibility of existing solutions include “flexible multimodality” and a “semantic understanding” of the objects that need to be recognized.

On the one hand, flexible multimodality means processing and merging different sensor modalities of devices such as color cameras and 3D sensors – depending on their availability and the quality of the environment data. On the other hand, various distinguishing features are evaluated, e.g. form, color, texture, labels, logos, etc. This enables objects to be generically and reliably described both in manufacturing and in everyday environments.
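One simple way to merge modalities depending on availability and data quality is a quality-weighted average of per-modality class scores. The sketch below assumes this scheme for illustration only; the function `fuse`, the modality names and the quality weights are hypothetical and not taken from the testbed.

```python
from typing import Dict, Optional

Scores = Dict[str, float]  # class label -> score from one modality


def fuse(readings: Dict[str, Optional[Scores]],
         quality: Dict[str, float]) -> Scores:
    """Quality-weighted average of class scores across modalities.

    A modality that is unavailable (None) or has non-positive quality
    is skipped, so the result degrades gracefully to whatever remains.
    """
    fused: Scores = {}
    total = 0.0
    for modality, scores in readings.items():
        weight = quality.get(modality, 0.0)
        if scores is None or weight <= 0.0:
            continue
        total += weight
        for label, score in scores.items():
            fused[label] = fused.get(label, 0.0) + weight * score
    if total == 0.0:
        return {}
    return {label: score / total for label, score in fused.items()}


# Assumed example: the depth data is rated three times as reliable as color.
result = fuse(
    {"color": {"cup": 0.6, "bowl": 0.4}, "depth": {"cup": 0.2, "bowl": 0.8}},
    {"color": 1.0, "depth": 3.0},
)
```

If the 3D sensor drops out, passing `None` for `"depth"` leaves the color scores as the only contribution.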

By understanding objects semantically, it is possible, for example, to infer the functions of parts of objects (“affordances”). In addition, objects are symbolically assigned to form/color/texture descriptors. Among other things, these descriptors support natural verbal interaction and the communication of the robot system’s detection decision. This makes it possible to identify and estimate the location of specific, previously known objects (e.g. for commissioning) as well as to detect object categories or abstractly detect previously unseen objects (“What could that possibly be?”). In particular, this enables object detection without the previous restrictions to textured objects, simple object geometries or the use of CAD data.

The generic, universal object detection system with robust and reliable components can thus be easily adapted to a wide range of applications. This will also allow cognitive mobile service robots to safely interact with humans in unstructured environments in the future.

Planning gripping tasks

Application & Use

In most industries, the automated handling and placing of objects has become a key application. The order of the day is a completely automated robotic solution for pick & place systems. However, due to the large variety of workpieces and the fact that they are handled by different types of grippers, there are a number of challenges associated with automating pick & place systems. Moreover, setting up a new workpiece for a pick & place system not only takes a lot of time but also calls for expert knowledge of the software. What the industry really needs is software that can be operated by a non-expert and that requires little time to set up a new workpiece. Fraunhofer IPA takes all these problems into account and has developed a compact solution in the form of the bp3™ software enabled with machine learning. Machine learning techniques in the software help the user to operate it very easily without the need for additional knowledge or expertise. The new technology not only makes life easier for the user, but also improves the robustness of the pick & place system. The software also offers the user more flexibility by providing both model-based and model-free gripping techniques.

Technology

In the project, the scientists aim to tackle the above-mentioned challenges by applying state-of-the-art machine learning techniques, which make the software more intelligent and robust. The project focuses on automatically determining the parameter sets for object detection and on automating the generation of gripping points. These technologies make the software more user-friendly, including for non-experts. To make the software more flexible, a model-free gripping technique is being developed, which helps the user apply the software in scenarios where no geometric model of the workpiece is available.

The newly developed technologies in Fraunhofer IPA’s bin-picking software have shown clear advantages over previous versions. The software is currently undergoing tests to ensure its stability for industrial release. The new version of the software will not only reduce the inhibitions of industrial users who have not yet invested in robot-based bin-picking, but will also generate new market interest through its increased efficiency.

Collision-free manipulation

Application & Use

Mobile service robots with manipulation capabilities are gradually getting a foothold in our everyday environments, for instance by performing fetch and carry tasks. To ensure the safety of humans nearby and to avoid any damage to the surroundings, the robots must be able to detect stationary and moving obstacles and find their way around them. Compared to industrial robots with fixed tasks and safety equipment, interactive robotic manipulators have to be flexible in their movements in order to cope with the dynamics of their surroundings. The aim of the testbed “Collision-free Manipulation” is to develop a new reactive controller for robotic manipulators which allows their collision-free operation in unstructured and cluttered environments.

Technology

The new solution supporting the operation of robotic manipulators in shared human-robot workspaces will be based on the method of Nonlinear Model Predictive Control (NMPC). This enables collaborative tasks to be performed while simultaneously avoiding collisions with any stationary and moving obstacles such as humans. The robot will still move smoothly without any abrupt stops or changes in direction of movement. The reactive controller will be integrated into existing solutions for motion planning and obstacle detection. The motion planner generates a smooth and continuous trajectory from the start position to the target destination of the robot. The trajectory consists of several path points along the desired direction of motion. Among other things, the obstacle detection module uses 3D sensors to perceive, on the fly, objects that could collide with the robot and provides information about their geometric location and size. The algorithm also attempts to predict the movement of obstacles and identify potential collisions in advance.

The motion control algorithm continuously tracks the trajectory data and the current and predicted locations of obstacles in real time. An exponential cost function is used to reliably avoid collisions: the cost rises sharply as the robot approaches an obstacle, and the controller minimizes it through reactive movements of the robotic arm (“repulsive force” caused by obstacles). At the same time, deviations from the original trajectory are kept to a minimum by reducing key parameters such as the “contour error” and “lag error” of the robot (“attractive force” caused by the desired trajectory).
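The interplay of the repulsive and attractive terms can be sketched as a single-step cost in a 2D plane. This is only an illustration under stated assumptions: the function names, the weights, the circular obstacle model and the `steepness` parameter are hypothetical, and a real NMPC controller would sum such a cost over a prediction horizon and minimize it subject to the robot dynamics.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def obstacle_cost(p: Point, center: Point, radius: float,
                  steepness: float = 5.0) -> float:
    """Exponential penalty that grows as p approaches the obstacle surface."""
    clearance = math.dist(p, center) - radius
    return math.exp(-steepness * clearance)


def tracking_errors(p: Point, ref: Point, tangent: Point) -> Tuple[float, float]:
    """Split the deviation from the reference point into (contour, lag).

    Lag is the component along the path tangent, contour the component
    perpendicular to it.
    """
    dx, dy = p[0] - ref[0], p[1] - ref[1]
    norm = math.hypot(tangent[0], tangent[1])
    tx, ty = tangent[0] / norm, tangent[1] / norm
    lag = dx * tx + dy * ty
    contour = -dx * ty + dy * tx
    return contour, lag


def stage_cost(p: Point, ref: Point, tangent: Point,
               obstacles: Sequence[Tuple[Point, float]],
               w_contour: float = 10.0, w_lag: float = 1.0,
               w_obstacle: float = 1.0) -> float:
    """Attractive tracking term plus repulsive obstacle term for one time step."""
    contour, lag = tracking_errors(p, ref, tangent)
    attract = w_contour * contour ** 2 + w_lag * lag ** 2
    repel = sum(obstacle_cost(p, c, r) for c, r in obstacles)
    return attract + w_obstacle * repel
```

Weighting the contour error more heavily than the lag error, as assumed here, lets the arm slow down along the path near an obstacle rather than leave the path sideways; the actual weighting in the testbed is not stated in the source.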

The method is generic, so it can be easily adapted to different types of robotic arms, as well as to redundant manipulators or the full kinematic chain of a mobile manipulation robot.

Imitation Learning

Application & Use

In assembly, products are often still put together manually, but interest in and demand for robot-based solutions are increasing. Reasons for the low degree of automation include the high investment costs and lengthy setup times for a robot. At present, highly specialized experts must first painstakingly learn the manual process in order to subsequently convert it into a robot program. In the process, the extensive motor knowledge that workers possess, e.g. the force required for a snap-fit to lock into place, is lost. The aim of this testbed is therefore to support the automated creation of the robot program, thus speeding up the setup process.

Technology

To achieve this, our technology starts with the experts themselves, aiming to use the robot programmer’s time efficiently. For this purpose, we take the trajectories and interaction forces occurring during the manual assembly process and use these as a guideline to create a robot program.

By using these trajectories and force data, even complex motion programs, such as pressing and moving in different directions, can be realized automatically. Divided into meaningful individual skills (e.g. several complex snap-fits), the generated complex programs can also be reused separately if the geometry of the object changes or if other variants need to be automated.
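Dividing a demonstration into individual skills can be illustrated with a very simple rule: split the recording wherever the contact force crosses a threshold. The sketch below assumes this rule purely for illustration; the sample format (position, force), the `segment_by_contact` name and the 2 N threshold are hypothetical and do not describe the testbed's actual segmentation.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (position in m, contact force in N), assumed 1D
Segment = Tuple[str, int, int]  # (label, start index, end index exclusive)


def segment_by_contact(demo: List[Sample],
                       force_threshold: float = 2.0) -> List[Segment]:
    """Split a demonstration into 'free_motion' and 'contact' segments."""

    def in_contact(i: int) -> bool:
        return abs(demo[i][1]) > force_threshold

    segments: List[Segment] = []
    start = 0
    for i in range(1, len(demo) + 1):
        # Close the current segment at the end or when the contact state flips.
        if i == len(demo) or in_contact(i) != in_contact(start):
            label = "contact" if in_contact(start) else "free_motion"
            segments.append((label, start, i))
            start = i
    return segments


# Assumed example: approach, press (e.g. a snap-fit), then retract.
demo = [(0.0, 0.1), (0.1, 0.2), (0.2, 5.0), (0.2, 6.0), (0.3, 0.3)]
skills = segment_by_contact(demo)
```

Each resulting segment could then be mapped to a reusable skill, so that a changed object geometry only requires re-recording or re-parameterizing the affected segment.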

Insight into the project

Video: Programming robots by demonstrating force-controlled assembly operations